Point & Press

“Today I’ve used my phone to check how long until the bus arrives at my stop. I’ve also used it to control the volume of my speakers at home, to learn more about a restaurant before going inside, and to send a photo I took with my friend to his phone, right next to mine.”

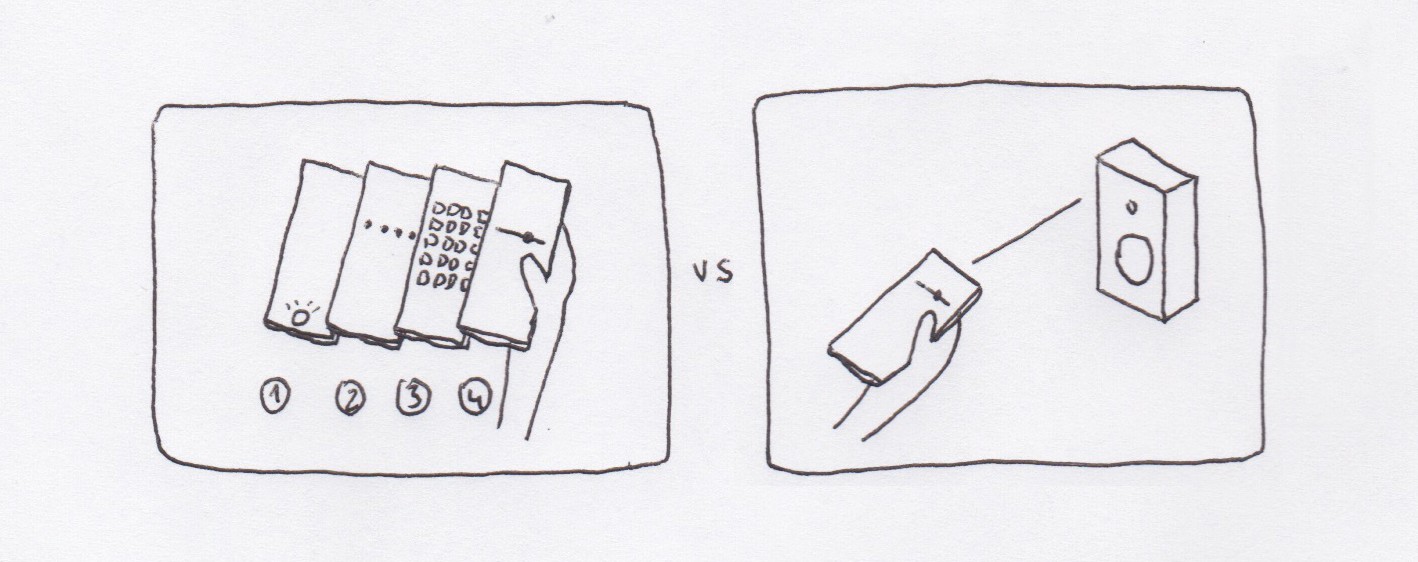

Most of these interactions require unlocking the phone, finding an app, and performing an action. They’re all end-to-end digital interactions, even though what we want to interact with are things we can touch and see, things we can point at: “I want to interact with *this* thing”.

What if we pointed our phones at those things?

As phones get better at understanding their position and orientation in relation to their surroundings, we may be able to interact with our surroundings in a more intuitive and natural way.
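
To make the idea a bit more concrete, here is a minimal sketch of how "pointing" could be resolved, assuming the phone already knows its own 2D position and heading and the positions of nearby things. Everything in it is hypothetical and only for illustration: the `Target` type, the `pointed_target` function, the flat coordinate system (heading in degrees, counterclockwise from the +x axis), and the 15-degree tolerance are my assumptions, not part of any real platform API.

```python
import math
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Target:
    """A thing the phone could point at (hypothetical, for illustration)."""
    name: str
    x: float
    y: float


def angular_offset(phone_x: float, phone_y: float, heading_deg: float,
                   target: Target) -> float:
    """Absolute angle, in degrees, between the heading and the direction to the target."""
    bearing = math.degrees(math.atan2(target.y - phone_y, target.x - phone_x))
    # Wrap the difference into [-180, 180) so that 350 and 0 are 10 degrees apart.
    diff = (bearing - heading_deg + 180.0) % 360.0 - 180.0
    return abs(diff)


def pointed_target(phone_x: float, phone_y: float, heading_deg: float,
                   targets: List[Target],
                   tolerance_deg: float = 15.0) -> Optional[Target]:
    """Return the target closest to the pointing direction, or None if none is within tolerance."""
    best: Optional[Target] = None
    best_offset = tolerance_deg
    for target in targets:
        offset = angular_offset(phone_x, phone_y, heading_deg, target)
        if offset <= best_offset:
            best, best_offset = target, offset
    return best


# Assumed example layout: a speaker to the "east" and a bus stop to the "north".
targets = [Target("speaker", 2.0, 0.0), Target("bus stop", 0.0, 5.0)]
```

With this layout, `pointed_target(0, 0, 90, targets)` picks the bus stop, and pointing away from both returns `None`. A real system would also need distance, elevation, and sensor noise, which this sketch ignores.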

Notes:

- Most of these interactions don’t deal with sensitive information and shouldn’t require user identification.

- You could discover new features / apps by pointing at objects / places.

- With haptic feedback, some of these interactions may not even require you to look at the screen.

- Pointing with your phone at other devices may offer different options based on the relationship between the owners: pointing at my computer vs. pointing at a friend’s phone vs. pointing at a stranger’s phone.

Another video sketch I did a few years ago on a similar topic: Spatially Aware Devices