Generative photography

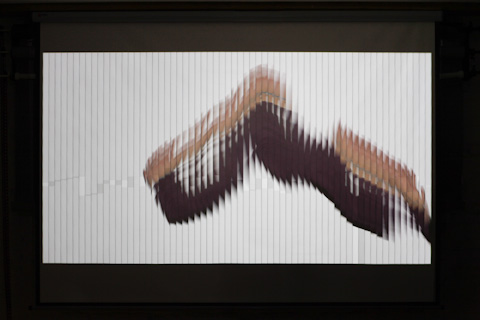

The picture above was generated by projecting white vertical rectangles, from left to right, at 25 fps onto a projection screen. A camera set to long exposure captured the projection over 5 seconds. The rectangles aren't uniform because of the rendering and the asynchrony between the frame rate of the video signal and the refresh rate of the projector.

The light grey rectangles were projected (and thus exposed) for twice as long as the dark grey ones. The brightest stripe was probably projected three times as long as the dark grey ones, and one rectangle was not projected at all.
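The repeated and missing rectangles can be reproduced with a small timing model. This is a minimal sketch, not the code behind the picture: it assumes the projector refreshes at a fixed rate (60 Hz here, a hypothetical value) and, at every refresh, shows whichever sketch frame arrived most recently. `exposure_counts` and its parameters are illustrative names.

```python
def exposure_counts(frame_times, refresh_hz, exposure_s):
    """Return how many projector refreshes displayed each sketch frame.

    Assumed model (hypothetical): the projector refreshes every
    1/refresh_hz seconds and shows the most recently delivered frame,
    with no buffering or tearing. frame_times must be sorted (seconds).
    """
    counts = [0] * len(frame_times)
    n_ticks = int(round(exposure_s * refresh_hz))
    latest = -1  # index of the newest frame delivered so far
    for i in range(n_ticks):
        t = i / refresh_hz
        # Advance to the newest frame delivered at or before this refresh.
        while latest + 1 < len(frame_times) and frame_times[latest + 1] <= t:
            latest += 1
        if latest >= 0:
            counts[latest] += 1
    return counts


# 25 fps rectangles during a 5-second exposure on a 60 Hz projector.
steady = exposure_counts([k / 25 for k in range(125)], 60, 5)
print(steady[:5])  # [3, 2, 3, 2, 2]

# One late frame (index 1) is superseded before any refresh shows it.
late = exposure_counts([0.0, 0.035, 0.04, 0.08], 50, 0.08)
print(late)  # [2, 0, 2, 0]
```

Under these assumptions, steady 25 fps frames on a 60 Hz projector alternate between 3 and 2 refreshes (2.4 on average), so the stripes differ in brightness even without any jitter, and a frame that arrives late can be replaced before any refresh shows it, leaving an unexposed stripe. The real pipeline (sketch, video signal, projector) likely has more stages, such as buffering, than this model captures.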

I've been doing some experiments with Processing (to generate different patterns and sequences), a projector, and a camera pointed at the projection screen. Some of them use a technique called procedural light painting; others combine slit-scan with projected patterns. I'm also very interested in the low repeatability of some of these experiments, like the picture above, which comes from the noise introduced by the asynchrony between the means of generation, communication and output. Maybe we can call it Generative Photography.

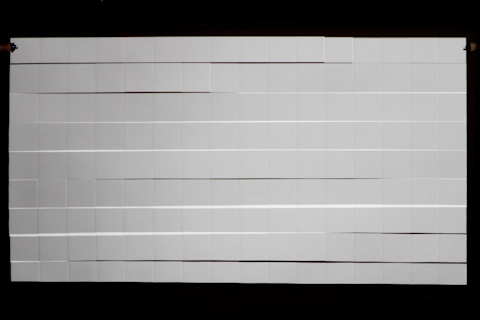

The following pictures were generated by projecting vertical lines one after the other, and then doing the same with horizontal lines (25 fps). The lines have a 3-pixel stroke and move 4 pixels each time, creating a double exposure every two lines, plus the error introduced by the asynchrony.
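The frame order described above is easy to write down, and it also tells you how long the exposure has to be to capture a whole sweep. A minimal sketch; the 1024x768 canvas is a hypothetical size, not one stated in the post, and `line_sequence` and `sweep_seconds` are illustrative names.

```python
def line_sequence(width, height, step=4):
    """Yield (orientation, position) for each frame: all vertical lines
    from left to right, then all horizontal lines from top to bottom."""
    for x in range(0, width, step):
        yield ("vertical", x)
    for y in range(0, height, step):
        yield ("horizontal", y)


def sweep_seconds(width, height, fps, step=4):
    """Exposure time needed to capture one complete sweep."""
    n_frames = sum(1 for _ in line_sequence(width, height, step))
    return n_frames / fps


print(list(line_sequence(8, 8)))
# [('vertical', 0), ('vertical', 4), ('horizontal', 0), ('horizontal', 4)]
print(sweep_seconds(1024, 768, 25))  # 17.92
print(round(sweep_seconds(1024, 768, 18), 2))  # 24.89
```

With this hypothetical canvas, the same 448-frame sweep takes about 18 seconds at 25 fps and about 25 seconds at 18 fps, so a slower frame rate needs a proportionally longer exposure.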

At 18 fps:

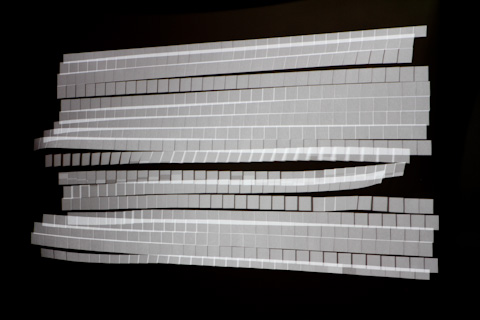

Projecting vertical rectangles instead of lines:

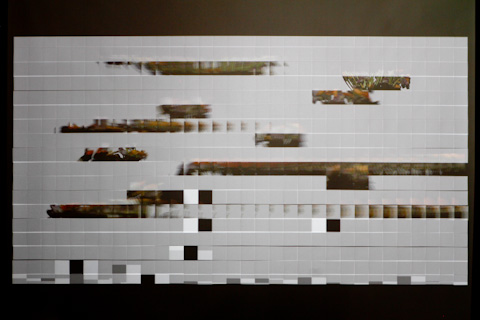

The following pictures were generated by projecting white squares sequentially, from left to right and top to bottom. The tiled-wall effect is due to a small movement of the projection screen: the edges of the squares don't match perfectly, creating overexposed areas (the white ones) and unexposed areas (the black ones):
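The tiled-wall effect can be approximated by accumulating exposures on the screen surface instead of on the projector's pixel grid. A minimal sketch under a hypothetical model: the screen shifts once, by whole pixels, after a given frame, so every later square lands slightly offset from the earlier ones. `square_order` and `exposure_map` are illustrative names.

```python
def square_order(cols, rows):
    """Grid cell shown at each frame: left to right, top to bottom."""
    return [(c, r) for r in range(rows) for c in range(cols)]


def exposure_map(cols, rows, size, shift_after, dx, dy):
    """Count exposures per screen pixel.

    Hypothetical model: after frame index `shift_after`, the screen has
    moved by (dx, dy) pixels relative to the projector, so later squares
    land offset on the screen. A margin keeps shifted squares in bounds.
    """
    pad = max(abs(dx), abs(dy))
    width, height = cols * size + 2 * pad, rows * size + 2 * pad
    counts = [[0] * width for _ in range(height)]
    for k, (c, r) in enumerate(square_order(cols, rows)):
        ox, oy = (dx, dy) if k > shift_after else (0, 0)
        for y in range(r * size, (r + 1) * size):
            for x in range(c * size, (c + 1) * size):
                counts[y + oy + pad][x + ox + pad] += 1
    return counts


# Squares of the second row land 1 px higher: the seam is exposed twice.
overlap = exposure_map(2, 2, 4, shift_after=1, dx=0, dy=-1)
print(max(max(row) for row in overlap))  # 2

# Squares of the second row land 1 px lower: the seam is never exposed.
gap = exposure_map(2, 2, 4, shift_after=1, dx=0, dy=1)
print(gap[5][1:9])  # [0, 0, 0, 0, 0, 0, 0, 0]
```

In this model, white seams correspond to pixels with a count of 2 and black seams to a count of 0. A real screen drifts gradually and by sub-pixel amounts, which this one-step model ignores.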

At 32 fps, many squares are missed because of the asynchrony:

It's interesting to play with two technologies that each have their own refresh and communication rate (the sketch and the projector), which creates this asynchrony, while still capturing the whole result with the camera's long exposure. Most of the time the results are unpredictable, even after watching the projection on the screen: some squares are generated but not projected, and some are projected but not seen by the naked eye.

Adding some perspective to capture more volume:

Using color squares:

Squares with stroke, no fill:

Vertical and horizontal lines, sequentially:

The following pictures are 1-second exposures: 0.5 seconds projecting white concentric circles and 0.5 seconds projecting the complementary image, so overall the whole surface is covered with light. In the first one I move after the first image is projected, so the second set of concentric circles doesn't catch me in the same position; it feels like I'm behind them.
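The complementary image is the inverse of the first one, so the two half-second projections together expose each point of the screen exactly once. A minimal sketch that builds both masks; the ring width `band`, the canvas size, and the name `ring_mask` are hypothetical.

```python
import math


def ring_mask(width, height, band, invert=False):
    """1 where a pixel is lit, 0 where it is dark.

    Pixels are grouped into concentric rings `band` pixels wide around
    the canvas center; even rings are lit, or odd rings if invert=True.
    """
    cx, cy = width / 2, height / 2
    mask = []
    for y in range(height):
        row = []
        for x in range(width):
            ring = int(math.hypot(x - cx, y - cy) // band)
            lit = (ring % 2 == 0) != invert
            row.append(1 if lit else 0)
        mask.append(row)
    return mask


first = ring_mask(64, 48, band=6)                # 0.5 s of circles
second = ring_mask(64, 48, band=6, invert=True)  # 0.5 s of the complement
total = [[a + b for a, b in zip(r1, r2)] for r1, r2 in zip(first, second)]
print(all(v == 1 for row in total for v in row))  # True
```

Each point of a static screen receives light for 0.5 s of the 1-second exposure; anything that moves between the two halves breaks that balance, which is what the first photo plays with.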

In the next experiment I project one circle per frame (at 25 fps), increasing the radius by 1 px each frame. Different objects are placed in the line of light.
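Because the radius grows by 1 px per frame at 25 fps, the time a circle needs to reach the edges of the frame follows directly from the projector resolution. A small worked sketch, assuming (hypothetically) a 1024x768 projector and circles centered on the frame; `frames_to_reach_corners` is an illustrative name.

```python
import math


def frames_to_reach_corners(width, height, step=1):
    """Frames until a centered circle growing `step` px per frame
    reaches the farthest corner (integer arithmetic, rounded up)."""
    dist_sq = (width // 2) ** 2 + (height // 2) ** 2
    dist = math.isqrt(dist_sq)
    if dist * dist < dist_sq:
        dist += 1  # round the corner distance up to a whole pixel
    return -(-dist // step)  # ceiling division


frames = frames_to_reach_corners(1024, 768)
print(frames, frames / 25)  # 640 25.6
```

Under that assumption, one full sweep takes about 25.6 seconds, which is in the same range as the 20- to 30-second exposures mentioned for these photos.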

When the position of the projection screen changes, the projected circles overlap and overexpose some areas or leave others unexposed, creating an amazing effect. I used a 3-pixel stroke weight; if the canvas moves fast, the result gets a bit blurry, since each area is exposed 3 times in different positions. I did this because a 1-pixel line didn't give enough light for the desired contrast. The next photos are 20- to 30-second exposures.
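The triple exposure follows from the stroke width: with a 3-pixel stroke and a 1-pixel step, each point on the screen lies under three consecutive circles. A minimal sketch that counts those hits, assuming a hard-edged stroke centered on the radius (Processing antialiases strokes, which this ignores); `hits_at_distance` is an illustrative name.

```python
def hits_at_distance(d, n_frames, stroke=3.0, step=1):
    """How many circles (radius = k * step for frame k) cover a point
    at distance d from the center, with a hard-edged stroke of width
    `stroke` centered on the circle."""
    half = stroke / 2
    return sum(1 for k in range(n_frames) if abs(d - k * step) < half)


print(hits_at_distance(100, 500))            # 3
print(hits_at_distance(100, 500, stroke=1))  # 1
```

The three hits come from frames spanning 0.08 seconds at 25 fps, so if the screen moves during that window they land in three slightly different places, which produces the blur. A 1-pixel stroke gives each point a single frame of light, a third of the exposure, which matches the lack of contrast described above.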

The detail of the pattern, created by the projector's pixelated light source, is quite interesting.

I also used a piece of lycra as a projection screen so I could move the canvas more flexibly.

This makes it easier to pull or push the fabric, or to move one of the corners. It's also interesting to pull and release it, capturing the fabric waving as it returns to its resting position.

I made many other tests with this configuration, and all of them turned out quite beautiful:

I'll continue experimenting with this kind of photography. I'm interested in introducing feedback into the projection, so that it reacts in real time to what the camera is capturing.

For more pictures and information about exposure times, aperture, etc., you can check my Flickr.

Tags: generative, painting, photography, procedural, projector, slitscan


October 21st, 2011 at 6:49 am

Thanks for the inspiring and informative post. I'd like to combine some of your ideas with some of the art I am making, but I can't seem to find any source code in your post. I think, for artists to subscribe to the creation of "generative photography" as a category (I love the idea), you could get new interested artists started with some code. Not because we don't want to do work, but because you already did. I could sit down and re-write your code from this description because you've communicated it brilliantly, but I don't have the spark that you had when it appeared to you that you could make this. So my resulting work would carry a different vibration. Of course, you may have posted it somewhere and I just missed it. In any case, I love your work and would love to try the new discipline it implies. Keep publishing, take care!