Hunting glitches

Wednesday, March 16th, 2011

Recently I did some more experiments in Generative Photography. I went a bit deeper into analyzing the glitches caused by the rendering and by the asynchrony between the frame rate of the video signal and the refresh rate of the projector. The experiments are an artistic exploration aimed at a certain aesthetic outcome rather than research in computer engineering, so I have not analyzed how these glitches are generated or why they behave as they do. However, here are some observations.

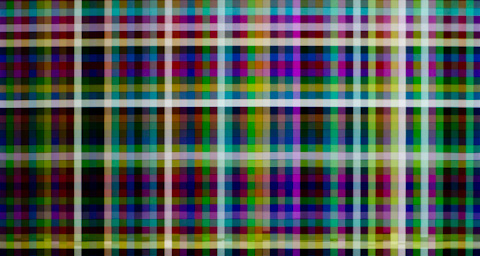

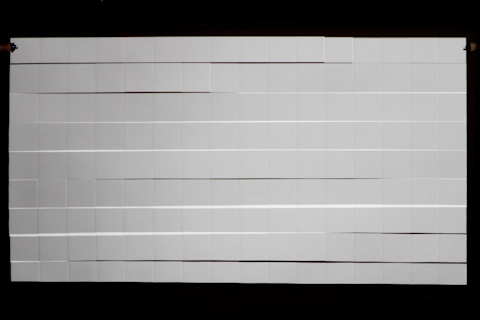

First I tested repeatability. These are three pictures taken consecutively at 10fps:

The glitches never look the same, but they are always distributed along a line at the same height in the image. If I restart the sketch in Processing, the position of the glitches changes: in this case, they are at the very top of the picture.

Pictures taken after this one also have the glitches at the top. So there seems to be a relation between the position of the glitches and the moment the sketch is started. I don’t know much about computer engineering, so suggestions on why this happens are welcome. Maybe it depends on the phase of the computer’s or graphics card’s clock at the moment the sketch starts?
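One hypothesis worth testing (it has not been verified here) is ordinary screen tearing: if the sketch swaps its frame buffer while the display is partway through scanning out an image, the cut appears at the scanline being drawn at that instant. The toy model below assumes a 60 Hz refresh, a constant top-to-bottom scan, and a sketch that swaps exactly every 1/fps seconds starting at an arbitrary offset; the function `tear_rows` and all its parameters are hypothetical. When the refresh rate is an integer multiple of the frame rate (60 Hz and 10fps), every swap lands at the same point in the scan, so the cut would stay at one height that depends only on when the sketch was started.

```python
import math
from fractions import Fraction

def tear_rows(fps, start_offset, refresh_hz=60, height=480, n_frames=5):
    """Scanline being drawn at each buffer swap (hypothetical tearing model).

    Assumes the display scans rows top to bottom at a constant rate over one
    refresh period and the sketch swaps buffers every 1/fps seconds, starting
    at start_offset seconds. Exact fractions avoid floating-point drift.
    """
    period = Fraction(1, refresh_hz)
    rows = []
    for k in range(n_frames):
        t = Fraction(start_offset) + Fraction(k, fps)  # time of the k-th swap
        phase = (t % period) / period                  # position within the scan, in [0, 1)
        rows.append(math.floor(phase * height))
    return rows

# Same frame rate, two different start times: the cut stays put, but where it sits depends on the start.
print(tear_rows(10, Fraction(1, 240)))
print(tear_rows(10, Fraction(1, 120)))
# A non-integer ratio (60 / 25 = 2.4) makes the cut wander from frame to frame.
print(tear_rows(25, 0))
```

In this model the first two calls give a constant row (120, then 240), while the 25fps call jumps between rows, which is one possible reading of why restarting the sketch moves the glitch line.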

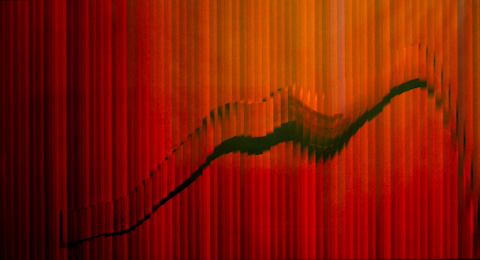

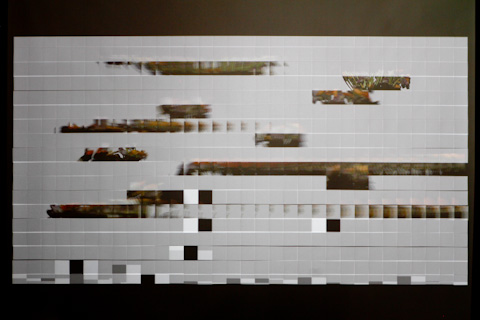

Afterwards I decided to play a movie in QuickTime while running the sketch, to see how the processor’s activity affects the glitches. It affects them quite a lot: in these three consecutive images it seems to stabilize the rendering:

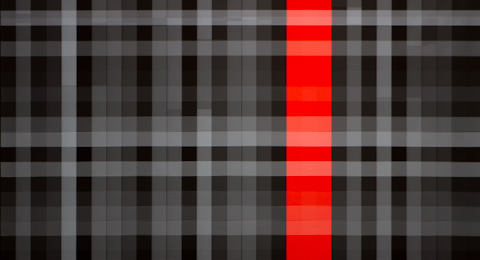

In the next experiment I used the same sketch with frame rates ranging from 10fps to 100fps. Here are some examples, with aperture and ISO kept constant:

24fps:

25fps:

An example of how much the patterns change by adding only 1 frame per second.
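A simple count hints at why one extra frame per second can change the pattern so much. Assuming a 60 Hz projector refresh (the post does not state the real rate) and perfectly steady clocks, the frame swaps of a sketch running at `fps` land on only `fps / gcd(fps, 60)` distinct positions within a refresh period before repeating. The helper `phase_cycle` is hypothetical and only illustrates this arithmetic; real clocks drift, so actual patterns will be messier.

```python
from math import gcd

def phase_cycle(fps, refresh_hz=60):
    """Number of distinct positions, within one refresh period, at which the
    sketch's frame swaps land before the pattern repeats (toy model assuming
    both clocks are exact and constant)."""
    return fps // gcd(fps, refresh_hz)

for fps in (24, 25, 30, 31, 32, 33, 34):
    print(f"{fps} fps -> {phase_cycle(fps)} distinct swap positions")
```

Under these assumptions 30fps gives a single position, 24fps gives 2, 25fps gives 5 and 31fps gives 31, so neighbouring frame rates can behave very differently.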

30fps:

31fps:

32fps:

33fps:

34fps:

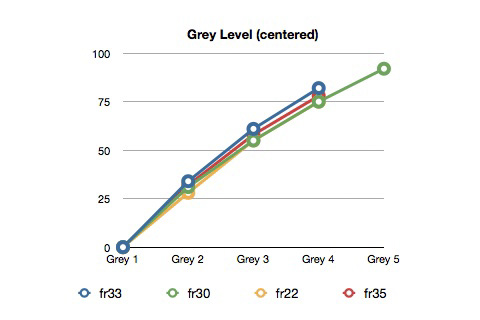

I wanted to know whether the exposure times of the different grey shades in a given picture are multiples of one another (i.e. the brightest grey was projected n times as long as the darkest grey). After slightly blurring an image in Photoshop to reduce variability, I measured the four grey levels using the color picker.

I repeated the measurement on four different photographs, then centered the values and plotted them on a graph.

The curve roughly fits the logarithmic exposure value formula. It seems, then, that the different shades of grey come from multiples of a unit exposure time (e.g. 0.2 s, 0.4 s, 0.6 s, etc.).
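The expected curve can be written down explicitly. With exposure value EV = log2(N²/t), aperture N fixed and exposure time t = k·t0 for a unit time t0, a grey built from k units differs from the darkest one (k = 1) by log2(k) stops. The sketch below computes those differences and centers them the same way the measurements were centered; the function names are hypothetical, and it works in stops, ignoring the camera's tone curve that maps stops to pixel values.

```python
import math

def relative_stops(multiples):
    """Exposure difference, in stops, of each grey relative to the darkest one,
    assuming every grey is the same projected light held for k unit intervals
    (t = k * t0) at fixed aperture and ISO, so delta_EV = log2(t / t_min)."""
    k_min = min(multiples)
    return [math.log2(k / k_min) for k in multiples]

def centered(values):
    """Subtract the mean so curves from different photographs can be compared."""
    mean = sum(values) / len(values)
    return [v - mean for v in values]

stops = relative_stops([1, 2, 3, 4])           # e.g. 0.2 s, 0.4 s, 0.6 s, 0.8 s
print([round(s, 3) for s in stops])            # [0.0, 1.0, 1.585, 2.0]
print([round(c, 3) for c in centered(stops)])  # [-1.146, -0.146, 0.439, 0.854]
```

The steps between consecutive greys shrink (1, then 0.585, then 0.415 stops), which is the logarithmic shape the measured curve roughly follows.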

Another interesting detail: sometimes the glitches are not clean rectangular cuts but have jagged, tooth-like edges, as shown in the picture:

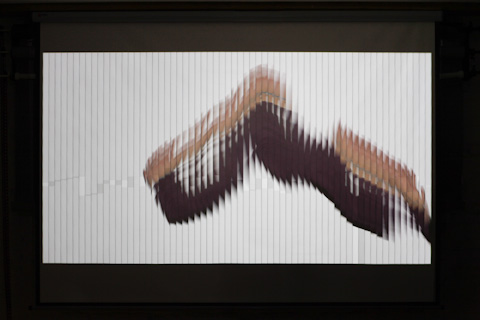

Here are a few examples of pictures created with this technique.

Colored rectangles with overexposed and underexposed bits. Probably neither of the two colors that differ in luminance is the color that was actually projected, since the recorded color depends on the exposure time.
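A toy model shows why the recorded color need not match the projected one. Assuming a linear sensor that clips at a maximum value (real cameras add gamma, white balance and a tone curve, all ignored here), each channel accumulates light in proportion to how long the color stayed on screen. Once one channel saturates, the ratios between channels change and so does the hue. The function `recorded_color` and the sample color are hypothetical.

```python
def recorded_color(projected_rgb, exposure_multiple):
    """Toy linear-sensor model: each channel accumulates light in proportion to
    how long the color was on screen, then clips at the sensor's maximum (1.0).
    Ignores gamma, white balance and the camera's tone curve."""
    return tuple(min(1.0, c * exposure_multiple) for c in projected_rgb)

projected = (0.8, 0.4, 0.2)               # hypothetical orange color
print(recorded_color(projected, 0.5))     # (0.4, 0.2, 0.1): same hue, darker
print(recorded_color(projected, 2))       # (1.0, 0.8, 0.4): red clips, hue shifts toward yellow
```

With half the exposure, the color only gets darker. With double the exposure, red saturates while green and blue keep rising, so the recorded color is lighter and yellower than anything that was projected.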

Vertical and horizontal rectangles projected sequentially:

Circles with a huge radius and a random component that makes them overlap, creating overexposed areas (in yellow):

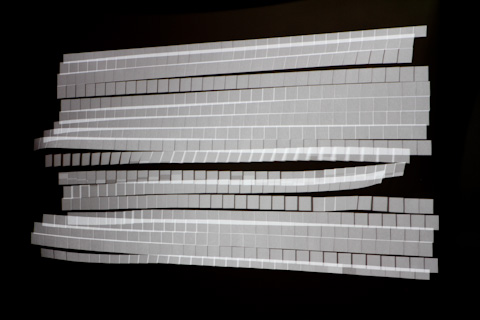

Stripes with color variation, following the projected sequence with a white surface to paint and create relief:

Combination of different patterns triggered manually and projected at high frame rates, with a certain degree of randomness added to specific variables:

Same principle as the rectangles at the top of the post, applied to concentric circles:

To conclude this post, here is a screenshot I took while designing the Generative Photography website. Speaking of digital glitches:

More pictures on the project’s website: www.generativephotography.com