Flaps: fast and contextual browsing

November 11th, 2012 by ishback

Web browsing is probably the main activity we use computers for today. Tabs have become a universal standard in web browsers, helping a wide range of users navigate the web. Tabs provide a visual representation of the active webpages waiting to be processed: read, discarded, kept for later, or archived. Lately I’ve been questioning whether tabs are the best way to navigate web content.

The way tabs are sorted is a combination of time (new tabs open at the right end of the tab bar) and source (new tabs opened from links on a specific webpage sit next to that webpage’s tab). These two sorting strategies, combined with the flat visual representation of the tabs, don’t help with navigating them, especially when the tab bar is cluttered and page titles and/or icons are hidden.
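The two placement rules described above can be sketched in a few lines of Python. This is only an illustration of the behaviour as I observe it, not how any browser actually implements it: the `Tab` class and `open_tab` function are hypothetical names, and placing a new tab after the siblings already opened from the same page is my assumption.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Tab:
    title: str
    opener: Optional[str] = None  # title of the tab whose link opened this one


def open_tab(tabs: List[Tab], title: str, opener: Optional[str] = None) -> List[Tab]:
    """Return a new tab list with the new tab placed by time or by source."""
    new_tab = Tab(title, opener)
    # Look for the source tab, if the new tab was opened from a link.
    source_index = next(
        (i for i, tab in enumerate(tabs) if opener is not None and tab.title == opener),
        None,
    )
    if source_index is None:
        # Time rule: a tab opened from scratch goes to the right end of the bar.
        return tabs + [new_tab]
    # Source rule: sit next to the source tab, after any siblings
    # that the same source already opened (assumption).
    insert_at = source_index + 1
    while insert_at < len(tabs) and tabs[insert_at].opener == opener:
        insert_at += 1
    return tabs[:insert_at] + [new_tab] + tabs[insert_at:]


bar: List[Tab] = []
bar = open_tab(bar, "News")
bar = open_tab(bar, "Mail")
bar = open_tab(bar, "Article A", opener="News")
bar = open_tab(bar, "Article B", opener="News")
bar = open_tab(bar, "Music")
print([tab.title for tab in bar])
```

Even in this tiny example the resulting order mixes "when" and "where from", with nothing in a flat bar to tell the two apart.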

If we take the tabs metaphor back to its origin: when organising paper documents, we used to write names on folders, use coloured folders, or use different drawers. Tabs in the browser are ethereal and don’t require such a structure, although they could potentially organise themselves by understanding the type of webpage they host. Tabs could be organised by type of content (media, personal, social, etc.) or by use (in focus / in background, one-time / frequent access, etc.).
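As a rough sketch of what organising by type of content could look like, the snippet below groups open pages by a category looked up from their host. The host names, the lookup table and the `group_tabs` function are all made up for illustration; a real browser would need a much smarter way to recognise what kind of page it is hosting.

```python
from collections import defaultdict
from typing import Dict, List
from urllib.parse import urlparse

# Hypothetical host-to-category table, using the content types mentioned above.
CATEGORY_BY_HOST = {
    "video.example": "media",
    "music.example": "media",
    "mail.example": "personal",
    "chat.example": "social",
}


def group_tabs(urls: List[str]) -> Dict[str, List[str]]:
    """Group open pages by content type; unknown hosts fall under 'other'."""
    groups: Dict[str, List[str]] = defaultdict(list)
    for url in urls:
        host = urlparse(url).hostname or ""
        groups[CATEGORY_BY_HOST.get(host, "other")].append(url)
    return dict(groups)


open_pages = [
    "https://video.example/watch",
    "https://mail.example/inbox",
    "https://music.example/player",
    "https://blog.example/post",
]
groups = group_tabs(open_pages)
for category, pages in groups.items():
    print(category, pages)
```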

Tabs are designed for ‘point and click’ in order to navigate through them. Pointing at things requires shifting the focus of attention and it usually slows down the interaction.

In order to understand how browsing could be improved, I analysed some of the behaviours I have developed while browsing (I use Chrome):

– I usually don’t look at the address bar when launching websites or performing searches. The shortcut to open a new page (Alt+T), Chrome’s omnibox, and autocomplete have turned the bar into an invisible interface. I think about reading the news and the news website appears on screen, with zero effort spent thinking about how to get there.

Also, I have noticed that I normally don’t keep open the webpages I visit frequently. I normally create a new tab, launch the webpage, do what I need to do, and close it right away to return to where I was. One of the reasons I unconsciously developed this behaviour is that it’s faster and less disruptive to create a new tab (Alt+T, type the first character, Enter: 0.25 sec max) than to activate a tab that is already open (find the tab, point, click, refresh: 1 sec + change of visual focus + potential procrastination). Switching between tabs follows the same reasoning.

– I pause/resume the music streaming many times a day, and it still takes two or three steps to do so each time. Likewise, it takes many steps to save an image to my local or remote repository, or to browse webpages I previously bookmarked. Many frequent operations are still performed through a generic interface.

– While looking for a specific tab on the tab bar, I sometimes end up checking the news on the way, or my email, or articles that I left open. Having all the tabs visible at all times can distract me, although I embrace it and somehow like it.

Based on the behaviours described above, there are some principles I’d like a browser’s interface to follow:

– Maximise the possibilities for psychomotor automation.

– Mutually adapt with the user and disappear with time.

– Minimise the ‘point & click’ and encourage fast navigation with the keyboard.

– Provide dedicated interactions for operations I perform frequently.

– Provide an adaptive interface that helps to focus, but doesn’t kill procrastination.

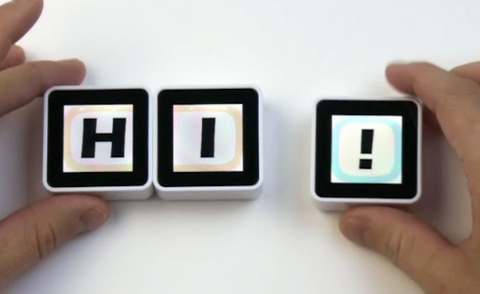

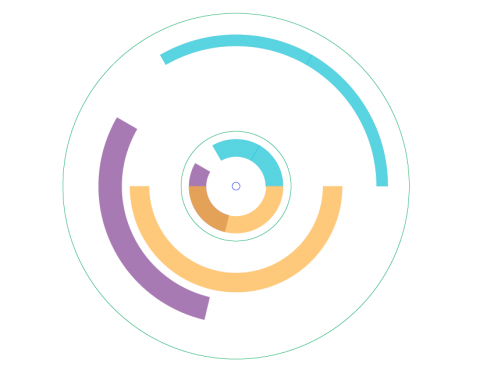

Trying to imagine a browser that follows those principles, I sketched Flaps, a full-screen browser with minimal visual infrastructure, an interface for contextual navigation and dedicated interactions for optimising frequent actions.

Bits of the interface:

– main interface:

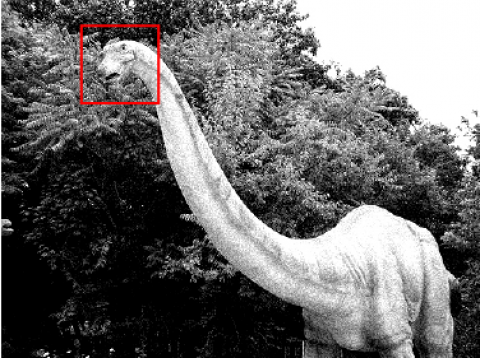

– contextual interface, after opening links from a webpage. When possible, webpage titles are reformatted to make them more meaningful:

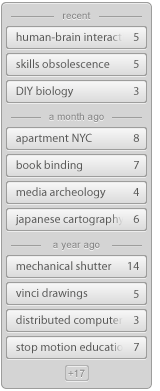

– extended interface, with automatic grouping:

– repository / bookmarks / ‘read it later’ interface:

– example of predefined searches:

– examples of actions over the active website:

Flaps is just a video prototype for now; I’d be curious to let people try it and see how their browsing behaviours would evolve. There are a few aspects that haven’t been tackled in this prototype and that should be taken into account when implementing an interactive prototype (loading progress feedback, history overview, the need for full-length URLs, optimising the position of the interface for different webpages and screen ratios/resolutions, compatibility with existing keyboard shortcuts, etc.).

Any feedback on the concept, as well as input about personal behaviours and workflows while browsing, is very welcome!